This weekend I tried some experiments to improve the automatic tracking feature.

Currently, tracking works by comparing the images pixel by pixel, trying to locate the zone that best resembles the one being tracked.
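To make the idea concrete, here is a minimal sketch of this kind of pixel-by-pixel search. It is not the program's actual code: the similarity measure (sum of squared differences), the function names and the tiny grayscale "frame" are all assumptions picked for illustration.

```python
def ssd(patch_a, patch_b):
    """Sum of squared differences between two equally sized patches."""
    return sum(
        (a - b) ** 2
        for row_a, row_b in zip(patch_a, patch_b)
        for a, b in zip(row_a, row_b)
    )


def crop(image, top, left, height, width):
    """Extract a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def best_match(image, template):
    """Slide the template over the image; return the best (top, left) and its score."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best_pos, best_score = None, float("inf")
    for top in range(ih - th + 1):
        for left in range(iw - tw + 1):
            score = ssd(crop(image, top, left, th, tw), template)
            if score < best_score:  # lower score means more similar
                best_pos, best_score = (top, left), score
    return best_pos, best_score


# Hypothetical 4x5 grayscale frame with a bright 2x2 "ball".
frame = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 9, 0],
    [0, 0, 0, 0, 0],
]
template = [[9, 8], [7, 9]]

position, score = best_match(frame, template)
print(position, score)
```

Because the score depends directly on raw pixel values, anything that changes those values (rotation, blur, lighting) changes the score too.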

This has a number of drawbacks: it gets lost easily when the object or the point of view rotates, when blur occurs, when the luminosity changes, etc., and sometimes even in "normal" conditions.

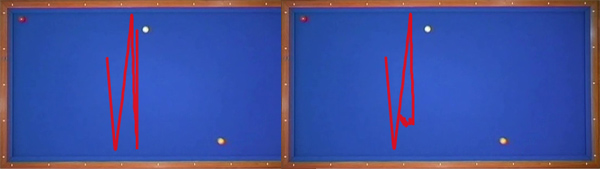

In the following pictures you can see how the program fails on what should be a straightforward task (snooker).

On the left, the tracking follows the ball, but it slowly drifts away from it and ends up on its side.

On the right, the tracking got lost after a while.

These two were done with very similar setups for the initial point to track.

It looks like this technique is highly dependent on starting conditions, and small errors accumulate…

The new method I'm trying is called SURF. It's a very powerful algorithm, and I'm leveraging open source code from the OpenSURF project.

The idea is to describe the initial point and each candidate by a set of 64 descriptor parameters, and then compare the points using these descriptors.
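The comparison step can be sketched as follows. This is only an illustration, not OpenSURF's API: it assumes the 64 descriptor values are already computed, uses made-up numbers, and picks the candidate with the smallest Euclidean distance (a common choice, but an assumption here). The function names are hypothetical.

```python
import math

DESCRIPTOR_SIZE = 64  # number of values describing each point


def descriptor_distance(d1, d2):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(d1, d2)))


def closest_candidate(reference, candidates):
    """Return the index and distance of the candidate closest to the reference."""
    distances = [descriptor_distance(reference, c) for c in candidates]
    index = min(range(len(candidates)), key=distances.__getitem__)
    return index, distances[index]


# Made-up descriptors: real ones would come from the SURF implementation.
reference = [0.1] * DESCRIPTOR_SIZE
candidates = [
    [0.5] * DESCRIPTOR_SIZE,                  # quite different
    [0.1] * (DESCRIPTOR_SIZE - 1) + [0.2],    # nearly identical
    [-0.1] * DESCRIPTOR_SIZE,                 # opposite sign
]

index, distance = closest_candidate(reference, candidates)
print(index, distance)
```

The point of this approach is that the match is decided in descriptor space rather than on raw pixels, so the result is only as good as the descriptors are stable from frame to frame.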

The main advantage is that it is invariant to rotation, blur, etc.

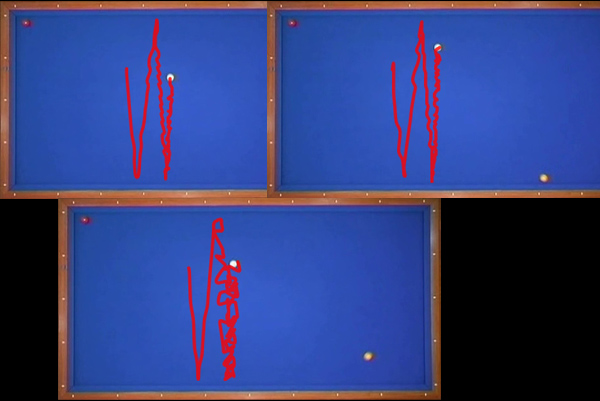

However, the way I need to use it makes it really slow compared to the original "template matching", and as you will see, I couldn't get it to work in a satisfying way yet.

These were produced just by varying some internal parameters.

What apparently happens is that the target attaches itself to the border of the ball, and then finds that the best match is on the other side of the ball!

Maybe it's taking the ball's rotation into account.

It didn't get lost, but the output path is unusable…

So this is not conclusive, and it will need more experimentation to get something working. But I wanted to share these results with you anyway.

I'm afraid we'll have to resort to manual tracking for a little longer.
